Our comprehensive real estate solutions bolster the housing economy by putting innovative, intuitive, and integrated software and data platforms in the hands of real estate professionals—helping them increase productivity, deepen loyalty, and future proof against shifting marketing challenges.

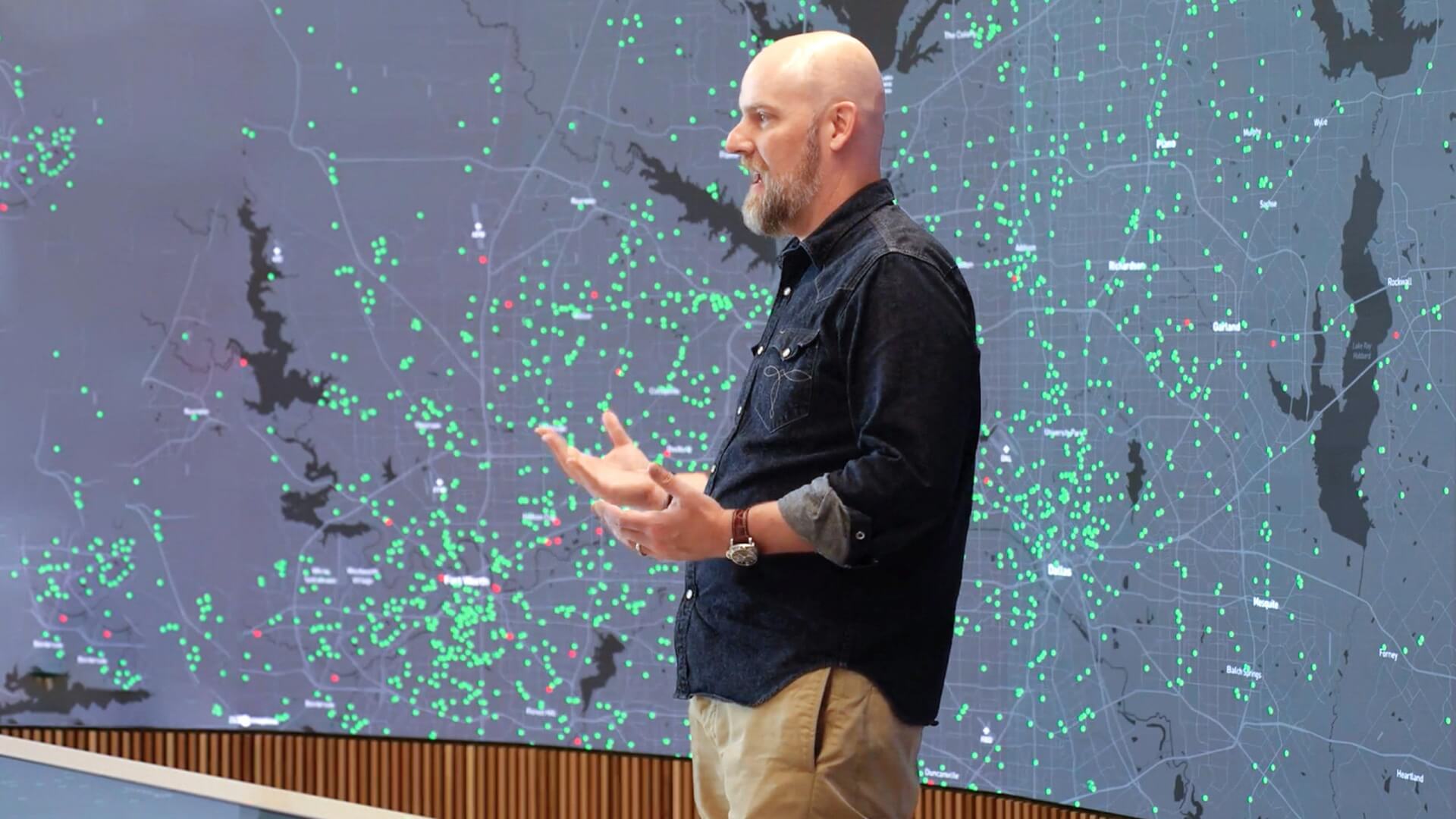

CoreLogic’s team of economists, data scientists and housing market professionals interpret trends, provide analysis and forecast the direction of the housing market.

North America’s most prominent multiple listing organizations trust CoreLogic® to power, manage and secure the data that make the home finding and selling experience possible.

Our innovative, intuitive, industry-leading tools help real estate professionals attract more leads, win more listings, and sell more homes.

CoreLogic solutions are versatile. Contact us to start a conversation.

CoreLogic helps diverse businesses and organizations grow revenue, fast track innovations and migrate risk with category-defying data, insights and analytics.

CoreLogic solutions automate and streamline the mortgage process; originate loans faster, improve borrower experiences, and helps lenders manage risk more effectively.

CoreLogic seamlessly connects the entire insurance process from quote to underwriting, to claims and risk management through our secure digital workflow.